Weather Forecasting without the difficult bits¶

Modern weather-forecast models are amazing: amazingly powerful, amazingly accurate - amazingly complicated, amazingly expensive to run and to develop, amazingly difficult to use and to experiment with. Quite often, I’d rather have something less amazing, but much faster and easier to use. Modern machine learning methods offer sophisticated statistical approximators even to very complex systems like the weather, and we now have hundreds of years of reanalysis output to train them on. How good a model can we build without using any physics, dynamics, chemistry, etc. at all?

My ambition here is to build a General Circulation Model (GCM) using only machine learning (ML) methods - no hand-specified physics, dynamics, chemistry etc. at all. To that end I’m going to take an existing model, and train a machine learning system to emulate it - the ML version will be less capable as a model of the atmosphere, but should make up for this by being much faster, and easier to use. A previous experiment along these lines had limited success but did suggest that good results were possible given the right model architecture.

It would be nice to use the Met Office UM (see video above) as the model to be emulated, but for a first attempt I’m going to pick an easier target:

The Twentieth Century Reanalysis version 2c (20CRv2c) provides data every 6-hours on a 2-degree grid, for the past 150+ years. So this is consistent data, at a manageable volume, for a long period. (I have used 20CRv2c ensemble member 1 data from 1969-2005 as training data, and 2006-on as validation).

I chose to look at 4 near-surface variables: 2-meter air temperature, mean-sea-level-presure, meridional wind and zonal wind. (Precipitation presents too many complications for a first attempt, so I did not use it here).

I’m going to use the Tensorflow platform to build my ML models (an arbitrary choice), so I need to convert the 20CRv2c data from netCDF into tensors.

20CRv2c is a low resolution analysis (2x2 degree), and I’m using only four variables, but even so it has a state vector with 180*90*4=64,800 dimensions. Directly generating forecasts in such a high-dimensional space will be difficult to do reliably, so the first thing to do is dimension reduction. I need to build an encoder-decoder model, specifically, an autoencoder. This creates a compressed representation of the large state vector in terms of a small number of ‘features’, and I expect working in the resulting feature representation to be much easier than working in the full state space. After some experimentation I ended up with a variational autoencoder with eight convolutional layers, encoding the features as a 100-dimensional latent space.

This autoencoder was very successful in building a low-dimensional representation of the 20CRv2c data:

But the real virtue of the 100-dimensional latent space encoding is that it allows me to use the ‘generator’ half of the autoencoder as a generative model: it provides a method for making new, internally consistent, weather states.

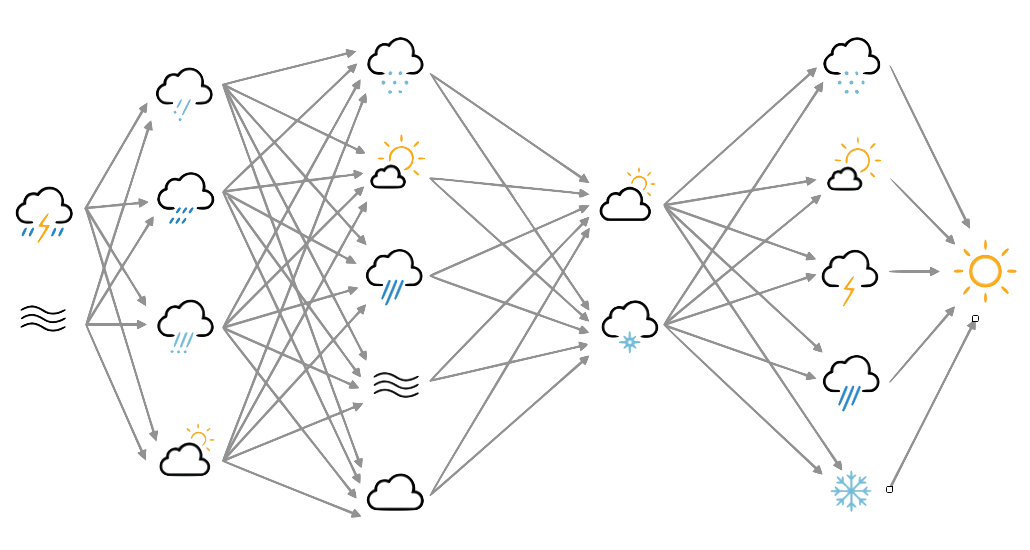

The key virtue of a GCM is that it can produce new, hypothetical, atmospheric states which are plausible representations of reality. The autoencoder generator has the same property. So I can convert the autoencoder into a predictor:

And I can then use the predictor as a GCM, by repeatedly re-using its output as its input:

This system produces reasonable temperature, pressure, and wind, using only machine learning - no physics, no dynamics, no chemistry. It’s well short of the state of the art for conventional GCMs, but the model was very quick and easy to produce: the model specification is only a few dozen lines of Python, trained in 20 minutes on my laptop, and it runs at more than 100,000 times the speed of the conventional GCM it was trained on.

As a proof-of-concept this is a success: It is possible to build useful General Circulation Models using just conventional machine learning tools. Such models are enormously faster (to build and to run) than conventional physics-based GCMs; it is likely they will go on to become very widely used.

This document and the data associated with it are crown copyright (2019) and licensed under the terms of the Open Government Licence. All code included is licensed under the terms of the GNU Lesser General Public License.