Get data sample for HadCRUT-EUSTACE comparison¶

It’s important to take a sample from the difference field, rather than differencing the two samples. So we take a field from both datasets at the same time, put them on the same grid, take the difference and extract a sample. That process can be slow (and take a lot of memory), so we’ll do it in one year batches:

#!/usr/bin/env python

# Make an latitude slice comparing Eustace and HadCRUT5 - for a given month,

# sampling across longitude and ensemble.

# Actually, do this for every month in a year - makes a more

# reasonable unit of work

import os

import iris

import numpy

import pickle

import argparse

parser = argparse.ArgumentParser()

parser.add_argument("--year", help="Year",

type=int,required=True)

parser.add_argument("--opdir", help="Directory for output files",

default="%s/EUSTACE/derived/HadCRUT5_comparison" % \

os.getenv('SCRATCH'),

type=str,required=False)

args = parser.parse_args()

if not os.path.isdir(args.opdir):

os.makedirs(args.opdir)

# Fix dask SPICE bug

import dask

dask.config.set(scheduler='single-threaded')

for month in range(1,13):

# Load the Eustace monthly normals

n=iris.load_cube("%s/EUSTACE/1.0/monthly/climatology_1961_1990/%02d.nc" %

(os.getenv('SCRATCH'),month),

iris.Constraint(cube_func=(lambda cell: cell.var_name == 'tas')))

# Array to store the sample in

ndata=numpy.ma.array(numpy.zeros((1,720)),mask=False)

# Load the ensemble (and anomalise)

e=[]

for member in range(10):

m=iris.load_cube("%s/EUSTACE/1.0/monthly/%04d/%02d.nc" %

(os.getenv('SCRATCH'),args.year,month),

iris.Constraint(cube_func=(lambda cell: \

cell.var_name == 'tasensemble_%d' % member)))

m = m-n # to anomaly

e.append(m)

# Load the HadCRUT5 ensemble and regrid to match EUSTACE

members = (1,2,3,4,5,56,57,58,59,60)

h=[]

for member in members:

m = iris.load_cube("/scratch/hadcc/hadcrut5/build/HadCRUT5/analysis/"+

"HadCRUT.5.0.0.0.analysis.anomalies.%d.nc" % member,

iris.Constraint(time=lambda cell: \

cell.point.year==args.year and cell.point.month==month))

m = m.regrid(e[0],iris.analysis.Nearest())

h.append(m)

# Make the slice

for lat in range(720):

m_e = numpy.random.randint(10)

m_h = numpy.random.randint(10)

rand_l = numpy.random.randint(0,1440)

ndata[0,lat]=h[m_h].data[lat,rand_l]-e[m_e].data[0,lat,rand_l]

# Store

dfile = "%s/%04d%02d.pkl" % (args.opdir,args.year,month)

pickle.dump( ndata, open( dfile, "wb" ) )

Script to make the sample batch for each year:

#!/usr/bin/env python

# Scripts to make slices for every month

import os

import datetime

for year in range (1850,2016):

print("./make_comparison_slice.py --year=%d" % year )

Script to combine the annual batches into a complete sample:

#!/usr/bin/env python

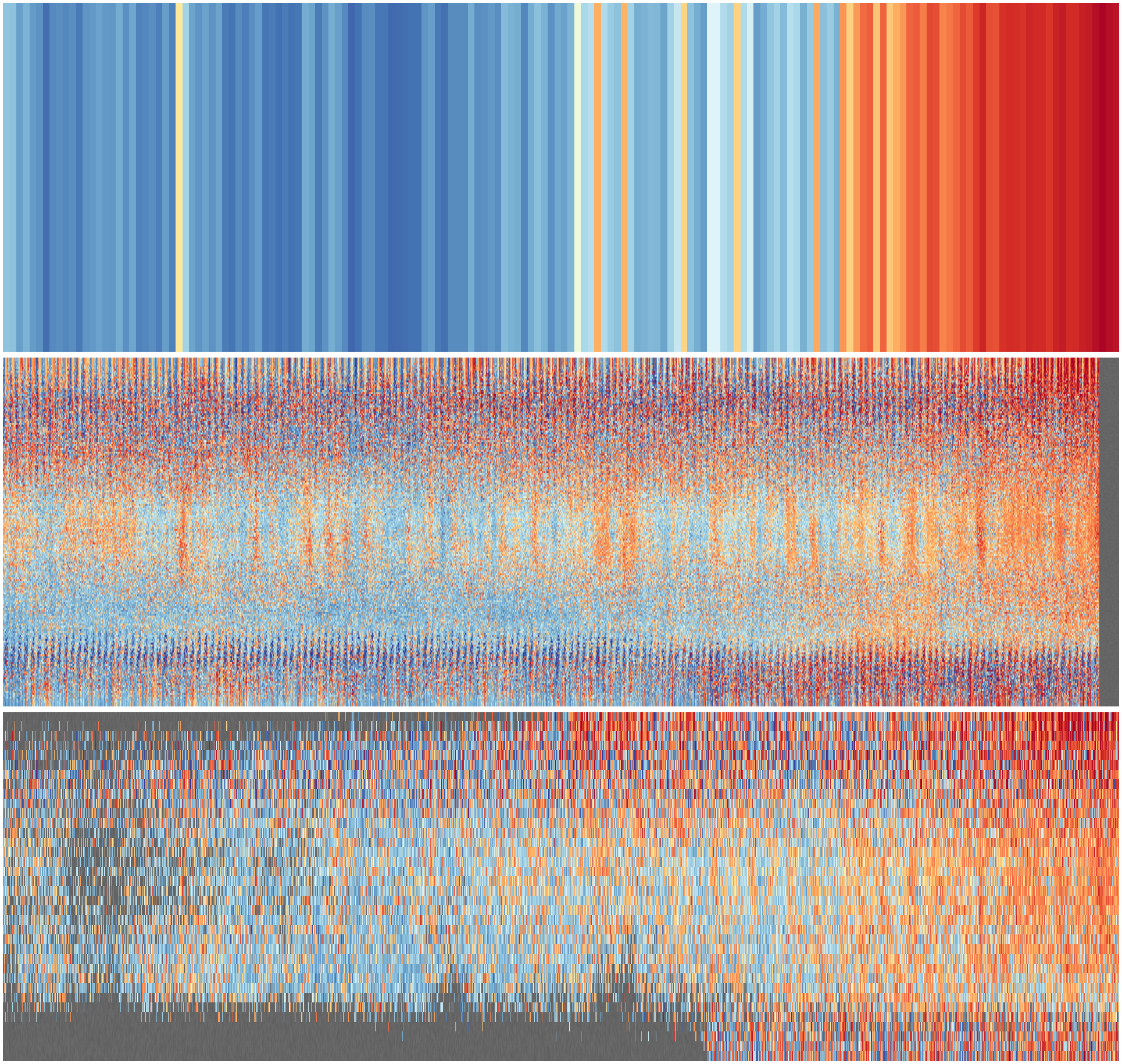

# Get the sample cube for HadCRUT5-EUSTACE comparison

# Monthly, resolved in latitude, sampling in longitude, sampling the ensembles.

# Delegates making the slices to the parallelisable make_slice script.

# This script only puts the slices together.

import os

import iris

import numpy

import datetime

import pickle

start=datetime.datetime(1851,1,1,0,0)

end=datetime.datetime(2018,12,31,23,59)

def get_sample_cube(start=datetime.datetime(1851,1,1,0,0),

end=datetime.datetime(2018,12,31,23,59)):

slice_dir = "%s/EUSTACE/derived/HadCRUT5_comparison" % \

os.getenv('SCRATCH')

# Array to store the sample in

ndata=numpy.ma.array(numpy.zeros(((2016-1850)*12,720)),mask=False)

dts=[]

# Assemble the sample slice by slice

for year in range(1850,2016):

for month in range(1,13):

t=(year-1850)*12+month-1

dfile = "%s/%04d%02d.pkl" % (slice_dir,year,month)

with open(dfile, "rb") as f:

dslice = pickle.load(f)

ndata[t,:]=dslice[0,:]

dts.append(datetime.datetime(year,month,15))

return (ndata,dts)

(ndata,dts) = get_sample_cube(start,end)

pickle.dump( (ndata,dts), open( "comparison.pkl", "wb" ) )