Video diagnostics of model training

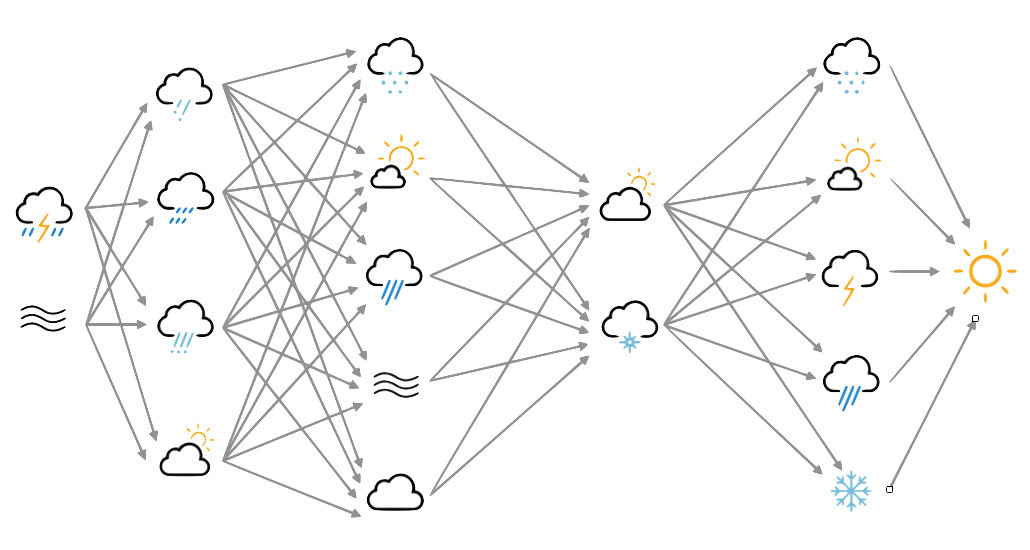

On the left, the model weights: The bar chart in the centre shows the weight associated with each neuron in the hidden layer, arranged in order, largest to smallest. Negative weights have been converted to positive (and the sign of the associated output layer weights switched accordingly). The colourmaps on top are the input layer weights, for each hidden layer neuron, at each input field location (so a lat:lon map). They are arranged in the same order as the hidden layer weights (so if hidden-layer neuron 3 has the largest weight, the input layer weights for neuron 3 are shown at top left). The colourmaps on the bottom are the output layer weights, arranged in the same way.

Top right, a sample pressure field: Original in red, after passing through the autoencoder in blue.

Bottom right, training progress: Loss v. number of training epochs (but note that one epoch here covers fewer training fields than one epoch in the other examples).
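The ordering and sign convention used for the left-hand panels can be sketched in plain Python. This is an illustration only: the values are made up, and the helper names `order_by_magnitude` and `sign` are not from the scripts (which use `numpy.argsort` and `numpy.sign` on the saved model weights).

```python
# Illustrative values only; the real script reads these from the saved model.
hidden = [0.2, -0.9, 0.5]                            # one value per hidden neuron
output_rows = [[1.0, 2.0], [3.0, -4.0], [5.0, 6.0]]  # output weights, one row per neuron

def order_by_magnitude(values):
    """Neuron indices sorted by absolute value, largest first."""
    return sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)

def sign(x):
    """-1 for negative values, +1 otherwise."""
    return -1.0 if x < 0 else 1.0

# Order in which the neurons are drawn
order = order_by_magnitude(hidden)

# Bar heights: magnitudes, in plotting order
bar_heights = [abs(hidden[i]) for i in order]

# Output weights with the sign of the hidden value folded in
flipped_rows = [[sign(hidden[i]) * w for w in output_rows[i]] for i in order]
```

Here neuron 1 has the largest magnitude, so it is drawn first, and because its hidden value is negative its output weights change sign.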

Making this video means saving the model state after each epoch, and having more, shorter epochs. We can modify the autoencoder script to do this:

#!/usr/bin/env python

# Very simple autoencoder for 20CR prmsl fields.

# Single, fully-connected layer as encoder+decoder, 32 neurons.

# Very unlikely to work well at all, but this isn't about good

# results, it's about getting started.

#

# This version concentrates on tracking the training of the autoencoder

# so small batches/epochs and save the state at every point.

import os

import tensorflow as tf

#tf.enable_eager_execution()

from tensorflow.data import Dataset

from glob import glob

import numpy

import pickle

# How much to do between output points

epoch_size=100

# How much training in total

n_epochs=1000

# File names for the serialised tensors to train on

input_file_dir=("%s/Machine-Learning-experiments/datasets/20CR2c/prmsl/training/" %

os.getenv('SCRATCH'))

training_files=glob("%s/*.tfd" % input_file_dir)

n_tf=len(training_files)

train_tfd = tf.constant(training_files)

# Create TensorFlow Dataset object from the file names

tr_data = Dataset.from_tensor_slices(train_tfd)

# Repeat the input data enough times that we don't run out

n_reps=(epoch_size*n_epochs)//n_tf +1

tr_data = tr_data.repeat(n_reps)

# We don't want the file names, we want their contents, so

# add a map to convert from names to contents.

def load_tensor(file_name):
    sict=tf.read_file(file_name) # serialised
    ict=tf.parse_tensor(sict,numpy.float32)
    return ict

tr_data = tr_data.map(load_tensor)

# Also need to reshape the data to linear, and produce a tuple

# (source,target) for model

def to_model(ict):
    ict=tf.reshape(ict,[1,91*180])
    return(ict,ict)

tr_data = tr_data.map(to_model)

# Input placeholder - treat data as 1d

original = tf.keras.layers.Input(shape=(91*180,))

# Encoding layer 32-neuron fully-connected

encoded = tf.keras.layers.Dense(32, activation='tanh')(original)

# Output layer - same shape as input

decoded = tf.keras.layers.Dense(91*180, activation='tanh')(encoded)

# Model relating original to output

autoencoder = tf.keras.models.Model(original, decoded)

# Choose a loss metric to minimise (mean squared error)

# and an optimiser to use (adadelta)

autoencoder.compile(optimizer='adadelta', loss='mean_squared_error')

# Set up a callback to save the model state

checkpoint = ("%s/Machine-Learning-experiments/"+

"simple_autoencoder_instrumented/"+

"saved_models/Epoch_{epoch:04d}") % os.getenv('SCRATCH')

if not os.path.isdir(os.path.dirname(checkpoint)):
    os.makedirs(os.path.dirname(checkpoint))

cp_callback = tf.keras.callbacks.ModelCheckpoint(checkpoint,

save_weights_only=False,

verbose=1)

# Train the autoencoder - saving it every epoch

history=autoencoder.fit(x=tr_data, # Get (source,target) pairs from this Dataset

epochs=n_epochs,

steps_per_epoch=epoch_size,

callbacks = [cp_callback],

verbose=2) # One line per epoch

# Save the training history

history_file=("%s/Machine-Learning-experiments/"+

"simple_autoencoder_instrumented/"+

"saved_models/history_to_%04d.pkl") % (

os.getenv('SCRATCH'),n_epochs)

with open(history_file, "wb") as hf:
    pickle.dump(history.history, hf)
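Two bits of bookkeeping in the script above are easy to check by hand: the number of dataset repeats, and the checkpoint file name template. The sketch below works through both. The file count `n_tf = 1460` and the `scratch` path are made-up values for illustration, and the last line only mimics the callback, assuming it fills in `{epoch}` with the 1-based epoch number.

```python
epoch_size = 100   # fields per epoch, as in the script
n_epochs = 1000    # epochs in total, as in the script
n_tf = 1460        # hypothetical number of training files

# Enough whole passes over the files to cover every training step
n_reps = (epoch_size * n_epochs) // n_tf + 1
fields_needed = epoch_size * n_epochs
fields_available = n_reps * n_tf

# The checkpoint template: '%' fills in the directory straight away,
# '{epoch:04d}' is left in place to be filled in at each save.
scratch = "/tmp/scratch"   # hypothetical stand-in for $SCRATCH
template = ("%s/Machine-Learning-experiments/" +
            "simple_autoencoder_instrumented/" +
            "saved_models/Epoch_{epoch:04d}") % scratch

# What the name for the first save would look like
first_save = template.format(epoch=1)
```

With these numbers `n_reps` is 69, giving 100740 fields for the 100000 needed. The zero-padded `Epoch_0001` form is the same pattern the plotting script builds with `Epoch_%04d`.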

Then we need a script to make a summary plot at each epoch:

#!/usr/bin/env python

# General model quality plot

# Can be run at any epoch - for video diagnostics.

import tensorflow as tf

tf.enable_eager_execution()

import numpy

import IRData.twcr as twcr

import iris

import datetime

import argparse

import os

import math

import pickle

import Meteorographica as mg

import matplotlib

from matplotlib.backends.backend_agg import FigureCanvasAgg as FigureCanvas

from matplotlib.figure import Figure

import cartopy

import cartopy.crs as ccrs

parser = argparse.ArgumentParser()

parser.add_argument("--epoch", help="Model at which epoch?",

type=int,required=True)

args = parser.parse_args()

# Get the 20CR data

ic=twcr.load('prmsl',datetime.datetime(2009,3,12,6),

version='2c')

ic=ic.extract(iris.Constraint(member=1))

# Get the autoencoder at 1000 epochs

model_save_file = ("%s/Machine-Learning-experiments/"+

"simple_autoencoder_instrumented/"+

"saved_models/Epoch_%04d") % (

os.getenv('SCRATCH'),1000)

autoencoder=tf.keras.models.load_model(model_save_file)

# Get the order of the hidden weights - most to least important

order=numpy.argsort(numpy.abs(autoencoder.get_weights()[1]))[::-1]

# Get the largest converged hidden weight

hw_max=numpy.max(numpy.abs(autoencoder.get_weights()[1]))

# Get the autoencoder at the chosen epoch

model_save_file = ("%s/Machine-Learning-experiments/"+

"simple_autoencoder_instrumented/"+

"saved_models/Epoch_%04d") % (

os.getenv('SCRATCH'),args.epoch)

autoencoder=tf.keras.models.load_model(model_save_file)

# Normalisation - Pa to mean=0, sd=1 - and back

def normalise(x):
    x -= 101325
    x /= 3000
    return x

def unnormalise(x):
    x *= 3000
    x += 101325
    return x

fig=Figure(figsize=(19.2,10.8), # 1920x1080, HD

dpi=100,

facecolor=(0.88,0.88,0.88,1),

edgecolor=None,

linewidth=0.0,

frameon=False,

subplotpars=None,

tight_layout=None)

canvas=FigureCanvas(fig)

# Top right - map showing original and reconstructed fields

projection=ccrs.RotatedPole(pole_longitude=180.0, pole_latitude=90.0)

ax_map=fig.add_axes([0.505,0.51,0.475,0.47],projection=projection)

ax_map.set_axis_off()

extent=[-180,180,-90,90]

ax_map.set_extent(extent, crs=projection)

matplotlib.rc('image',aspect='auto')

# Run the data through the autoencoder and convert back to iris cube

pm=ic.copy()

pm.data=normalise(pm.data)

ict=tf.convert_to_tensor(pm.data, numpy.float32)

ict=tf.reshape(ict,[1,91*180]) # Batch of one flattened field

result=autoencoder.predict_on_batch(ict)

result=tf.reshape(result,[91,180])

pm.data=unnormalise(result)

# Background, grid and land

ax_map.background_patch.set_facecolor((0.88,0.88,0.88,1))

#mg.background.add_grid(ax_map)

land_img_orig=ax_map.background_img(name='GreyT', resolution='low')

# original pressures as red contours

mg.pressure.plot(ax_map,ic,

scale=0.01,

resolution=0.25,

levels=numpy.arange(870,1050,7),

colors='red',

label=False,

linewidths=1)

# Encoded pressures as blue contours

mg.pressure.plot(ax_map,pm,

scale=0.01,

resolution=0.25,

levels=numpy.arange(870,1050,7),

colors='blue',

label=False,

linewidths=1)

mg.utils.plot_label(ax_map,

'%04d-%02d-%02d:%02d' % (2009,3,12,6),

facecolor=(0.88,0.88,0.88,0.9),

fontsize=8,

x_fraction=0.98,

y_fraction=0.03,

verticalalignment='bottom',

horizontalalignment='right')

# Add the model weights on the left

# Where on the plot to put each axes

def axes_geom(layer=0,channel=0,nchannels=36):
    if layer==0:
        base=[0.0,0.6,0.5,0.4]
    else:
        base=[0.0,0.0,0.5,0.4]
    ncol=math.sqrt(nchannels)
    nr=channel//ncol
    nc=channel-ncol*nr
    nr=ncol-1-nr # Top down
    geom=[base[0]+(base[2]/ncol)*0.95*nc,
          base[1]+(base[3]/ncol)*0.95*nr,
          (base[2]/ncol)*0.95,
          (base[3]/ncol)*0.95]
    geom[0] += (0.05*base[2]/(ncol+1))*(nc+1)
    geom[1] += (0.05*base[3]/(ncol+1))*(nr+1)
    return geom

# Plot a single set of weights

def plot_weights(weights,layer=0,channel=0,nchannels=36,
                 vmin=None,vmax=None):
    ax_input=fig.add_axes(axes_geom(layer=layer,
                                    channel=channel,
                                    nchannels=nchannels),
                          projection=projection)
    ax_input.set_axis_off()
    ax_input.set_extent(extent, crs=projection)
    ax_input.background_patch.set_facecolor((0.88,0.88,0.88,1))
    # weights is an iris cube holding one neuron's weights on the lat:lon grid
    lats = weights.coord('latitude').points
    lons = weights.coord('longitude').points-180
    prate_img=ax_input.pcolorfast(lons, lats, weights.data,
                                  cmap='coolwarm',
                                  vmin=vmin,
                                  vmax=vmax,
                                  )

# Plot the hidden layer weights

def plot_hidden(weights):
    # Single axes - weight magnitude v. neuron
    ax=fig.add_axes([0.05,0.425,0.425,0.15])
    # Axes ranges from data
    ax.set_xlim(-0.6,len(weights)-0.4)
    ax.set_ylim(0,hw_max*1.05)
    ax.bar(x=range(len(weights)),
           height=numpy.abs(weights[order]),
           color='grey',
           tick_label=order)

plot_hidden(autoencoder.get_weights()[1])

for layer in [0,2]:

w_l=autoencoder.get_weights()[layer]

vmin=numpy.mean(w_l)-numpy.std(w_l)*3

vmax=numpy.mean(w_l)+numpy.std(w_l)*3

count=0

for channel in order:

w_in=ic.copy()

if layer==0:

w_in.data=w_l[:,channel].reshape(ic.data.shape)

else:

w_in.data=w_l[channel,:].reshape(ic.data.shape)

w_in.data *= numpy.sign(autoencoder.get_weights()[1][channel])

plot_weights(w_in,layer=layer,channel=count,nchannels=36,

vmin=vmin,vmax=vmax)

count += 1

# Scatterplot of encoded v original

ax=fig.add_axes([0.54,0.05,0.225,0.4])

aspect=.225/.4*16/9

# Axes ranges from data

dmin=min(ic.data.min(),pm.data.min())

dmax=max(ic.data.max(),pm.data.max())

dmean=(dmin+dmax)/2

dmax=dmean+(dmax-dmean)*1.05

dmin=dmean-(dmean-dmin)*1.05

if aspect<1:
    ax.set_xlim(dmin/100,dmax/100)
    ax.set_ylim((dmean-(dmean-dmin)*aspect)/100,
                (dmean+(dmax-dmean)*aspect)/100)
else:
    ax.set_ylim(dmin/100,dmax/100)
    ax.set_xlim((dmean-(dmean-dmin)*aspect)/100,
                (dmean+(dmax-dmean)*aspect)/100)

ax.scatter(x=pm.data.flatten()/100,

y=ic.data.flatten()/100,

c='black',

alpha=0.25,

marker='.',

s=2)

ax.set(ylabel='Original',

xlabel='Encoded')

ax.grid(color='black',

alpha=0.2,

linestyle='-',

linewidth=0.5)

# Plot the training history

history_save_file=("%s/Machine-Learning-experiments/"+

"simple_autoencoder_instrumented/"+

"saved_models/history_to_%04d.pkl") % (

os.getenv('SCRATCH'),1000)

history=pickle.load( open( history_save_file, "rb" ) )

ax=fig.add_axes([0.82,0.05,0.155,0.4])

# Axes ranges from data

ax.set_xlim(0,len(history['loss']))

ax.set_ylim(0,numpy.max(history['loss']))

ax.set(xlabel='Epochs of training',

ylabel='Loss')

ax.grid(color='black',

alpha=0.2,

linestyle='-',

linewidth=0.5)

ax.plot(range(len(history['loss'][0:args.epoch])),

history['loss'][0:args.epoch],

color='grey',

linestyle='-',

linewidth=2)

# Render the figure as a png

figfile=("%s/Machine-Learning-experiments/"+

"simple_autoencoder_instrumented/"+

"images/comparison_%04d.png") % (

os.getenv('SCRATCH'),args.epoch)

if not os.path.isdir(os.path.dirname(figfile)):
    os.makedirs(os.path.dirname(figfile))

fig.savefig(figfile)
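The normalisation used in the script above is a simple shift and scale, and it is worth checking that the two helpers undo each other. The sketch below uses non-mutating versions on plain floats with made-up pressures; the script's own versions work in place on the array they are given.

```python
def normalise(x):
    """Pa to roughly zero mean and unit spread."""
    return (x - 101325.0) / 3000.0

def unnormalise(x):
    """Inverse of normalise: back to Pa."""
    return x * 3000.0 + 101325.0

pressures = [98000.0, 101325.0, 104000.0]   # illustrative values in Pa
scaled = [normalise(p) for p in pressures]
restored = [unnormalise(s) for s in scaled]

# The output layer uses tanh, so model output lies between -1 and 1.
# In pressure units that range is:
lowest = unnormalise(-1.0)
highest = unnormalise(1.0)
```

One consequence of the tanh output layer with this scaling: the reconstructed field can only take values between 98325 Pa and 104325 Pa, so deep lows and strong highs outside that range cannot be reproduced exactly.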

To make the video, it is necessary to run the script above hundreds of times - giving an image after each epoch of training. This script makes the list of commands needed to make all the images, which can be run in parallel.

#!/usr/bin/env python

# Make a comparison plot for each epoch 1-1000

# Actually make a list of commands to do that,

# which can then be run in parallel.

import os

import subprocess

import datetime

# Where to put the output files

opdir="%s/slurm_output" % os.getenv('SCRATCH')

if not os.path.isdir(opdir):
    os.makedirs(opdir)

# Function to check if the job is already done for this epoch

def is_done(epoch):
    op_file_name=("%s/Machine-Learning-experiments/"+
                  "simple_autoencoder_instrumented/"+
                  "images/comparison_%04d.png") % (
                        os.getenv('SCRATCH'),epoch)
    if os.path.isfile(op_file_name):
        return True
    return False

f=open("run.txt","w+")

epoch=1

while epoch<=1000:
    if is_done(epoch):
        epoch=epoch+1
        continue
    cmd="./compare_full.py --epoch=%d \n" % epoch
    f.write(cmd)
    epoch=epoch+1

f.close()
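The script above only writes the command list; it does not say what runs it. Any job runner that takes one command per line will do. As a minimal sketch, assuming nothing beyond the Python standard library, the list could be run a few commands at a time like this (the function names and the default of 4 workers are illustrative, not part of the original scripts):

```python
import shlex
import subprocess
from concurrent.futures import ThreadPoolExecutor

def read_commands(path):
    """Read the non-empty command lines from a file such as run.txt."""
    with open(path) as f:
        return [line.strip() for line in f if line.strip()]

def run_command(cmd):
    """Run one command line and return its exit status."""
    return subprocess.run(shlex.split(cmd)).returncode

def run_all(commands, workers=4):
    """Run the commands a few at a time; exit statuses come back in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_command, commands))
```

Calling `run_all(read_commands("run.txt"))` would then make the missing images. Because the list skips epochs whose image already exists, regenerating the list and running it again picks up only the frames that failed.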

To turn the thousand images into a movie, use ffmpeg:

#!/bin/bash

ffmpeg -r 12 -pattern_type glob -i /scratch/hadpb/Machine-Learning-experiments/simple_autoencoder_instrumented/images/comparison_\*.png -c:v libx264 -preset slow -tune animation -profile:v high -level 4.2 -pix_fmt yuv420p -crf 25 -c:a copy full.mp4
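The command above has the image directory hard-coded. As a sketch, the core of the same command can be built as an argument list in Python, with the directory passed in; the helper names here are illustrative, and only a subset of the options above is included. The second helper does the frame-rate arithmetic.

```python
import os

def ffmpeg_args(image_dir, fps=12, output="full.mp4"):
    """Argument list equivalent to the core of the command above."""
    return ["ffmpeg",
            "-r", str(fps),                 # frames per second
            "-pattern_type", "glob",        # let ffmpeg expand the wildcard
            "-i", os.path.join(image_dir, "comparison_*.png"),
            "-c:v", "libx264",
            "-pix_fmt", "yuv420p",
            "-crf", "25",
            output]

def video_seconds(n_frames, fps=12):
    """Length of the video for a given number of frames."""
    return n_frames / fps
```

Passing a list like this to `subprocess.run` bypasses the shell, so the `*` reaches ffmpeg unexpanded, which is what the `\*` in the shell command achieves. At 12 frames per second, 1000 frames make a video a little over 83 seconds long.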