Oldstyle Assimilation with precipitation observations¶

The oldstyle assimilation makes no assumptions about what the observations to be assimilated are: It will take anything as long as it is possible to infer the MSLP output field from them. So we should be able to use exactly the same method (and code) to assimilate (pseudo-)observations of precipitation (rather than MSLP).

Then we can train a model to make an MSLP field from the pseudo-observations. Exactly the same code as for the MSLP observations version (except for the data source and the normalisation).

#!/usr/bin/env python

# Estimate prmsl fields from (simulated) observations with the coverage of

# 20CRv2c obs in March 1916.

# Use observations of prate.

import os

import tensorflow as tf

#tf.enable_eager_execution()

from tensorflow.data import Dataset

import ML_Utilities

import pickle

import numpy

from glob import glob

# How many epochs to train for

n_epochs=50

def normalise(ic):

ic = tf.math.sqrt(ic)

ic *= 100

return ic

# File names for the serialised tensors to train on

input_file_dir=(("%s/Machine-Learning-experiments/datasets/"+

"20CR2c/prmsl/training/") %

os.getenv('SCRATCH'))

training_files=glob("%s/*.tfd" % input_file_dir)

n_steps=len(training_files)

train_tfd = tf.constant(training_files)

# Create TensorFlow Dataset object from the file names

field_data = Dataset.from_tensor_slices(train_tfd)

# We don't want the file names, we want their contents, so

# add a map to convert from names to contents.

def load_tensor(file_name):

sict=tf.read_file(file_name) # serialised

ict=tf.parse_tensor(sict,numpy.float32)

ict=tf.reshape(ict,[91,180,1])

return ict

field_data = field_data.map(load_tensor)

# Use all the data once each epoch

field_data = field_data.repeat(n_epochs)

field_data = field_data.batch(1)

# Same with the test dataset

input_file_dir=(("%s/Machine-Learning-experiments/datasets/"+

"20CR2c/prmsl/test/") %

os.getenv('SCRATCH'))

test_files=glob("%s/*.tfd" % input_file_dir)

test_steps=len(test_files)

test_tfd = tf.constant(test_files)

field_test_data = Dataset.from_tensor_slices(test_tfd)

field_test_data = field_test_data.map(load_tensor)

field_test_data = field_test_data.repeat(n_epochs)

field_test_data = field_test_data.batch(1)

# That's the targets - now make a matching dataset of the

# station observations.

# Use the file names from the field files, but repoint them;

# also adjust to use the training/test split from the field files

def load_observations(file_name):

# Get the ob tensor file name from the field tensor file name

file_name=tf.strings.regex_replace(file_name,

'prmsl','prate')

file_name=tf.strings.regex_replace(file_name,

'datasets/','datasets/obs_1916/')

file_name=tf.strings.regex_replace(file_name,

'test/','training/')

file_name=tf.strings.regex_replace(file_name,

'training/2009','test/2009')

sict=tf.read_file(file_name) # serialised

ict=tf.parse_tensor(sict,numpy.float32)

ict=normalise(ict)

ict=tf.reshape(ict,[488,])

return ict

obs_data = Dataset.from_tensor_slices(train_tfd)

obs_data = obs_data.repeat(n_epochs)

obs_data = obs_data.map(load_observations)

obs_data = obs_data.batch(1)

# And the test observations

obs_test_data = Dataset.from_tensor_slices(test_tfd)

obs_test_data = obs_test_data.repeat(n_epochs)

obs_test_data = obs_test_data.map(load_observations)

obs_test_data = obs_test_data.batch(1)

# Zip the target and source together for training

training_data = Dataset.zip((obs_data, field_data))

test_data = Dataset.zip((obs_test_data, field_test_data))

# Need to resize data so it's dimensions are a multiple of 8 (3*2-fold pool)

class ResizeLayer(tf.keras.layers.Layer):

def __init__(self, newsize=None, **kwargs):

super(ResizeLayer, self).__init__(**kwargs)

self.resize_newsize = newsize

def call(self, input):

return tf.image.resize_images(input, self.resize_newsize,

align_corners=True)

def get_config(self):

return {'newsize': self.resize_newsize}

# Build the model:

# obs->dense network->convolutional network->field

# Input placeholder

original = tf.keras.layers.Input(shape=(488,))

x = tf.keras.layers.Dropout(0.9)(original)

# Obs processing layers

x = tf.keras.layers.Dense(512)(original)

x = tf.keras.layers.LeakyReLU()(x)

x = tf.keras.layers.Dropout(0.5)(x)

x = tf.keras.layers.Dense(512)(x)

x = tf.keras.layers.LeakyReLU()(x)

x = tf.keras.layers.Dropout(0.5)(x)

# Need to make a 10x20x8 layer for decoding

x = tf.keras.layers.Dense(1600,

activity_regularizer=tf.keras.regularizers.l1(10e-5))(x)

x = tf.keras.layers.LeakyReLU()(x)

encoded = tf.keras.layers.Reshape(target_shape=(10,20,8,))(x)

# Decoding layers

x = tf.keras.layers.Conv2D(8, (3, 3), padding='same')(encoded)

x = tf.keras.layers.LeakyReLU()(x)

x = tf.keras.layers.UpSampling2D((2, 2))(x)

x = tf.keras.layers.Conv2D(8, (3, 3), padding='same')(x)

x = tf.keras.layers.LeakyReLU()(x)

x = tf.keras.layers.UpSampling2D((2, 2))(x)

x = tf.keras.layers.Conv2D(16, (3, 3), padding='same')(x)

x = tf.keras.layers.LeakyReLU()(x)

x = tf.keras.layers.UpSampling2D((2, 2))(x)

decoded = tf.keras.layers.Conv2D(1, (3, 3), padding='same')(x)

resized=ResizeLayer(newsize=(91,180))(decoded)

# Model relating observations to field

autoencoder = tf.keras.models.Model(original,resized)

# Choose a loss metric to minimise (RMS)

# and an optimiser to use (adadelta)

autoencoder.compile(optimizer='adadelta', loss='mean_squared_error')

# Train the autoencoder

history=autoencoder.fit(x=training_data,

epochs=n_epochs,

steps_per_epoch=n_steps,

validation_data=test_data,

validation_steps=test_steps,

verbose=2) # One line per epoch

# Save the model

save_file=("%s/Machine-Learning-experiments/"+

"oldstyle_assimilation_1916_prate/"+

"saved_models/Epoch_%04d") % (

os.getenv('SCRATCH'),n_epochs)

if not os.path.isdir(os.path.dirname(save_file)):

os.makedirs(os.path.dirname(save_file))

tf.keras.models.save_model(autoencoder,save_file)

history_file=("%s/Machine-Learning-experiments/"+

"oldstyle_assimilation_1916_prate/"+

"saved_models/history_to_%04d.pkl") % (

os.getenv('SCRATCH'),n_epochs)

pickle.dump(history.history, open(history_file, "wb"))

This actually works. The reconstruction is nothing like as good as with pressure observations, but that’s as expected - there’s much less information about the pressure field in precipitation observations than pressure observations.

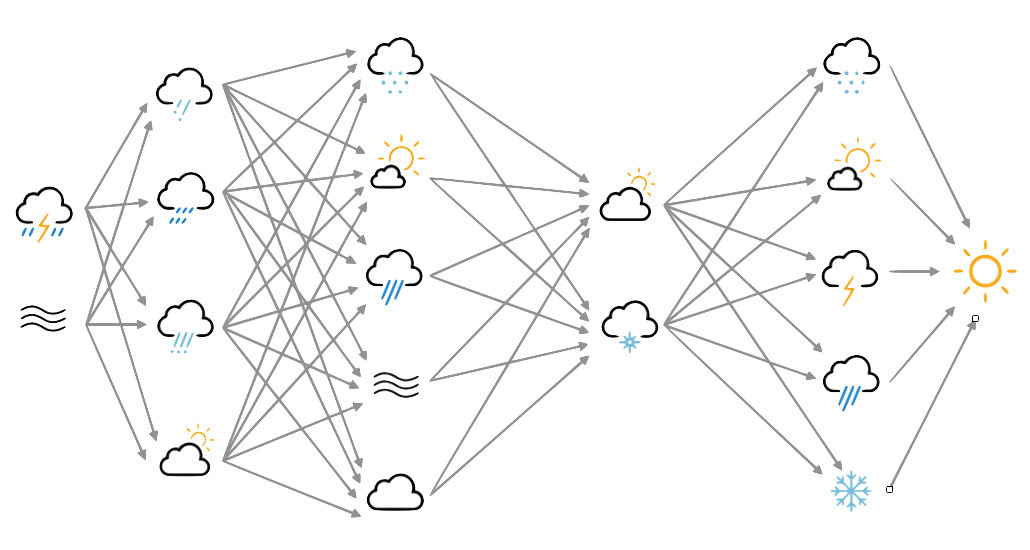

Top, a sample pressure field: Target in red, as reconstructed from precipitation observations (black dots) in blue. Bottom, a scatterplot of target v. reconstructed pressures for the sample field, and a graph of training progress: Loss v. no. of training epochs.

Script to make the figure¶

#!/usr/bin/env python

# Model training results plot

import tensorflow as tf

tf.enable_eager_execution()

import numpy

import IRData.twcr as twcr

import iris

import datetime

import argparse

import os

import math

import pickle

import Meteorographica as mg

import matplotlib

from matplotlib.backends.backend_agg import FigureCanvasAgg as FigureCanvas

from matplotlib.figure import Figure

import cartopy

import cartopy.crs as ccrs

# Normalisation - Pa to mean=0, sd=1 - and back

def normalise(x):

x -= 101325

x /= 3000

return x

def unnormalise(x):

x *= 3000

x += 101325

return x

def normalise_prate(x):

x = tf.math.sqrt(x)

x *= 100

return x

class ResizeLayer(tf.keras.layers.Layer):

def __init__(self, newsize=None, **kwargs):

super(ResizeLayer, self).__init__(**kwargs)

self.resize_newsize = newsize

def call(self, input):

return tf.image.resize_images(input, self.resize_newsize,

align_corners=True)

def get_config(self):

return {'newsize': self.resize_newsize}

year=1916

month=3

day=12

hour=6

# Get the 20CR data

#ic=twcr.load('prmsl',datetime.datetime(2009,3,12,18),

ic=twcr.load('prmsl',datetime.datetime(year,month,day,hour),

version='2c')

ic=ic.extract(iris.Constraint(member=1))

#ic=ic.collapsed('member', iris.analysis.MEAN)

icp=twcr.load('prate',datetime.datetime(year,month,day,hour),

version='2c')

icp=icp.extract(iris.Constraint(member=1))

# Make the fake observations

obs=twcr.load_observations_fortime(datetime.datetime(1916,3,12,6),

version='2c')

ensemble=[]

for index, row in obs.iterrows():

ensemble.append(icp.interpolate(

[('latitude',row['Latitude']),

('longitude',row['Longitude'])],

iris.analysis.Linear()).data.item())

ensemble = numpy.array(ensemble, dtype=numpy.float32)

ensemble = normalise_prate(ensemble)

obs_t = tf.convert_to_tensor(ensemble, numpy.float32)

obs_t = tf.reshape(obs_t,[1,488])

# Get the assimilation model

model_save_file=("%s/Machine-Learning-experiments/"+

"oldstyle_assimilation_1916_prate/"+

"saved_models/Epoch_%04d") % (

os.getenv('SCRATCH'),50)

autoencoder=tf.keras.models.load_model(model_save_file,

custom_objects={'ResizeLayer': ResizeLayer})

fig=Figure(figsize=(9.6,10.8), # 1/2HD

dpi=100,

facecolor=(0.88,0.88,0.88,1),

edgecolor=None,

linewidth=0.0,

frameon=False,

subplotpars=None,

tight_layout=None)

canvas=FigureCanvas(fig)

# Top - map showing original and reconstructed fields

# Plot the locations of the stations

projection=ccrs.RotatedPole(pole_longitude=180.0, pole_latitude=90.0)

ax_map=fig.add_axes([0.01,0.51,0.98,0.48],projection=projection)

ax_map.set_axis_off()

extent=[-180,180,-90,90]

ax_map.set_extent(extent, crs=projection)

matplotlib.rc('image',aspect='auto')

# Run the fake through the assimilator and produce an iris cube

pm = ic.copy()

result=autoencoder.predict_on_batch(obs_t)

result=tf.reshape(result,[91,180])

pm.data=unnormalise(result)

# Background, grid and land

ax_map.background_patch.set_facecolor((0.88,0.88,0.88,1))

#mg.background.add_grid(ax_map)

land_img_orig=ax_map.background_img(name='GreyT', resolution='low')

# Station locations

mg.observations.plot(ax_map,obs,radius=0.5,

facecolor='black')

# original pressures as red contours

mg.pressure.plot(ax_map,ic,

scale=0.01,

resolution=0.25,

levels=numpy.arange(870,1050,7),

colors='red',

label=False,

linewidths=1)

# Encoded pressures as blue contours

mg.pressure.plot(ax_map,pm,

scale=0.01,

resolution=0.25,

levels=numpy.arange(870,1050,7),

colors='blue',

label=False,

linewidths=1)

mg.utils.plot_label(ax_map,

'%04d-%02d-%02d:%02d' % (year,month,day,hour),

facecolor=(0.88,0.88,0.88,0.9),

fontsize=8,

x_fraction=0.98,

y_fraction=0.03,

verticalalignment='bottom',

horizontalalignment='right')

# Scatterplot of encoded v original

ax=fig.add_axes([0.08,0.05,0.45,0.4])

aspect=.225/.4*16/9

# Axes ranges from data

dmin=min(ic.data.min(),pm.data.min())

dmax=max(ic.data.max(),pm.data.max())

dmean=(dmin+dmax)/2

dmax=dmean+(dmax-dmean)*1.05

dmin=dmean-(dmean-dmin)*1.05

if aspect<1:

ax.set_xlim(dmin/100,dmax/100)

ax.set_ylim((dmean-(dmean-dmin)*aspect)/100,

(dmean+(dmax-dmean)*aspect)/100)

else:

ax.set_ylim(dmin/100,dmax/100)

ax.set_xlim((dmean-(dmean-dmin)*aspect)/100,

(dmean+(dmax-dmean)*aspect)/100)

ax.scatter(x=pm.data.flatten()/100,

y=ic.data.flatten()/100,

c='black',

alpha=0.5,

marker='.',

s=3)

ax.set(ylabel='20CR2c',

xlabel='Constructed')

ax.grid(color='black',

alpha=0.2,

linestyle='-',

linewidth=0.5)

# Plot the training history

history_save_file=("%s/Machine-Learning-experiments/"+

"oldstyle_assimilation_1916_prate/"+

"saved_models/history_to_%04d.pkl") % (

os.getenv('SCRATCH'),50)

history=pickle.load( open( history_save_file, "rb" ) )

ax=fig.add_axes([0.62,0.05,0.35,0.4])

# Axes ranges from data

ax.set_xlim(0,len(history['loss']))

ax.set_ylim(0,numpy.max(numpy.concatenate((history['loss'],

history['val_loss']))))

ax.set(xlabel='Epochs',

ylabel='Loss (grey) and validation loss (black)')

ax.grid(color='black',

alpha=0.2,

linestyle='-',

linewidth=0.5)

ax.plot(range(len(history['loss'])),

history['loss'],

color='grey',

linestyle='-',

linewidth=2)

ax.plot(range(len(history['val_loss'])),

history['val_loss'],

color='black',

linestyle='-',

linewidth=2)

# Render the figure as a png

fig.savefig("comparison_results.png")